Project: Covid-19 classifier based on lung X-ray images

Published:

This post walks through building a deep learning model that predicts, from a lung X-ray scan, whether a patient has pneumonia, Covid-19, or no illness. The project runs in Google Colaboratory with TensorFlow 2.8.2 and covers, step by step, how to preprocess the data, build the model architecture, and train and evaluate the model.

The post is compatible with Google Colaboratory and can be accessed through this link:

Project: Covid-19 classifier based on lung X-ray images

- Developed by Armin Norouzi

- Compatible with Google Colaboratory and TensorFlow 2.8.2

- Objective: Build a deep learning model that predicts whether a patient has pneumonia, Covid-19, or no illness based on lung X-ray scans.

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.models import Sequential

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

from tensorflow.keras.callbacks import EarlyStopping

from tensorflow.keras import layers

import matplotlib.pyplot as plt

import numpy

Getting the data and becoming familiar with it

Get the data

import zipfile

# Download zip file of the Covid-19 X-ray images

!wget https://gitlab.com/arminny/ml_course_datasets/-/raw/main/Covid19-dataset.zip

# Unzip the downloaded file

zip_ref = zipfile.ZipFile("Covid19-dataset.zip", "r")

zip_ref.extractall()

zip_ref.close()

--2022-09-21 16:28:24-- https://gitlab.com/arminny/ml_course_datasets/-/raw/main/Covid19-dataset.zip

Resolving gitlab.com (gitlab.com)... 172.65.251.78, 2606:4700:90:0:f22e:fbec:5bed:a9b9

Connecting to gitlab.com (gitlab.com)|172.65.251.78|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 21847859 (21M) [application/octet-stream]

Saving to: ‘Covid19-dataset.zip’

Covid19-dataset.zip 100%[===================>] 20.83M 63.9MB/s in 0.3s

2022-09-21 16:28:25 (63.9 MB/s) - ‘Covid19-dataset.zip’ saved [21847859/21847859]

!ls Covid19-dataset

test train

We can see we’ve got a train and test folder.

Let’s see what’s inside one of them.

!ls Covid19-dataset/train/

Covid Normal Pneumonia

import os

# Walk through the Covid19-dataset directory and list the number of files
for dirpath, dirnames, filenames in os.walk("Covid19-dataset"):
    print(f"There are {len(dirnames)} directories and {len(filenames)} images in '{dirpath}'.")

There are 2 directories and 1 images in 'Covid19-dataset'.

There are 3 directories and 1 images in 'Covid19-dataset/test'.

There are 0 directories and 26 images in 'Covid19-dataset/test/Covid'.

There are 0 directories and 20 images in 'Covid19-dataset/test/Normal'.

There are 0 directories and 20 images in 'Covid19-dataset/test/Pneumonia'.

There are 3 directories and 1 images in 'Covid19-dataset/train'.

There are 0 directories and 111 images in 'Covid19-dataset/train/Covid'.

There are 0 directories and 70 images in 'Covid19-dataset/train/Normal'.

There are 0 directories and 70 images in 'Covid19-dataset/train/Pneumonia'.

# Get the class names

import pathlib

import numpy as np

data_dir = pathlib.Path("Covid19-dataset/train/") # turn our training path into a Python path

# Build the list of class names from the subdirectories only, so stray files in the folder are skipped
class_names = np.array(sorted(item.name for item in data_dir.glob('*') if item.is_dir()))

print(class_names)

['Covid' 'Normal' 'Pneumonia']

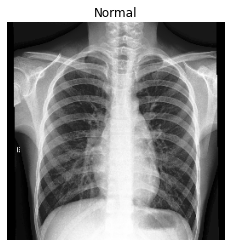

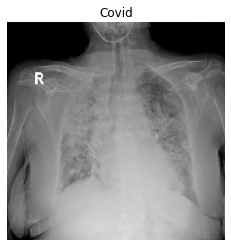

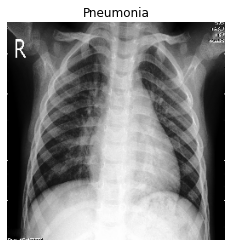

# View an image

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import random

def view_random_image(target_dir, target_class):
    # Setup target directory (we'll view images from here)
    target_folder = target_dir + target_class
    # Get a random image path
    random_image = random.sample(os.listdir(target_folder), 1)
    # Read in the image and plot it using matplotlib
    img = mpimg.imread(target_folder + "/" + random_image[0])
    plt.imshow(img)
    plt.title(target_class)
    plt.axis("off")
    print(f"Image shape: {img.shape}") # show the shape of the image
    return img

# View a random image from the training dataset

img = view_random_image(target_dir="Covid19-dataset/train/",

target_class="Normal")

Image shape: (256, 256, 4)

# View a random image from the training dataset

img = view_random_image(target_dir="Covid19-dataset/train/",

target_class="Covid")

Image shape: (256, 256, 4)

# View a random image from the training dataset

img = view_random_image(target_dir="Covid19-dataset/train/",

target_class="Pneumonia")

Image shape: (256, 256, 4)

# View the image shape

img.shape # returns (height, width, colour channels)

(256, 256, 4)

Prepare data

#Construct an ImageDataGenerator object:

DIRECTORY = "Covid19-dataset/train/"

CLASS_MODE = "categorical"

COLOR_MODE = "grayscale"

TARGET_SIZE = (256,256)

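Looking at the image shape more closely, you’ll see it’s in the form (height, width, colour channels). The files are stored with four channels, while the generators below load them as single-channel grayscale.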

BATCH_SIZE = 32

training_data_generator = ImageDataGenerator(rescale= 1/255,

zoom_range = 0.1,

rotation_range = 25,

height_shift_range = 0.05,

width_shift_range = 0.05)

validation_data_generator = ImageDataGenerator(rescale= 1/255)

training_iterator = training_data_generator.flow_from_directory(directory= "Covid19-dataset/train/",

target_size=(256, 256),

color_mode='grayscale',

class_mode='categorical',

batch_size=BATCH_SIZE)

validation_iterator = validation_data_generator.flow_from_directory(directory= "Covid19-dataset/test/",

target_size=(256, 256),

color_mode='grayscale',

class_mode='categorical',

batch_size=BATCH_SIZE)

Found 251 images belonging to 3 classes.

Found 66 images belonging to 3 classes.
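The two counts above, together with the batch size, determine how many batches each pass over the data takes. As a quick check, the sketch below reproduces the `8/8` and `3/3` step counts that Keras prints during training and evaluation. The helper name `batches_per_epoch` is made up for illustration and is not part of the notebook.

```python
import math

BATCH_SIZE = 32
TRAIN_IMAGES = 251  # "Found 251 images belonging to 3 classes."
VAL_IMAGES = 66     # "Found 66 images belonging to 3 classes."


def batches_per_epoch(num_images, batch_size):
    """Number of batches needed to see every image once (last batch may be partial)."""
    return math.ceil(num_images / batch_size)


train_steps = batches_per_epoch(TRAIN_IMAGES, BATCH_SIZE)
val_steps = batches_per_epoch(VAL_IMAGES, BATCH_SIZE)

# Size of the final, partial batch in each iterator
last_train_batch = TRAIN_IMAGES - (train_steps - 1) * BATCH_SIZE
last_val_batch = VAL_IMAGES - (val_steps - 1) * BATCH_SIZE

print(train_steps, val_steps)            # 8 3
print(last_train_batch, last_val_batch)  # 27 2
```

The last validation batch holds only two images, which is worth remembering when reading per-batch numbers.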

Model structure

from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPool2D, Dropout

from tensorflow.keras.optimizers import Adam

from tensorflow.keras import Sequential

from tensorflow.keras import losses

def model_structure():
    model = Sequential()
    model.add(Conv2D(10, 3, padding='same', input_shape=(256, 256, 1), name="Conv_1", activation="relu"))
    model.add(Conv2D(10, 3, padding='same', name="Conv_2", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_1"))
    model.add(Conv2D(10, 5, padding='same', name="Conv_3", activation="relu"))
    model.add(Conv2D(10, 5, padding='same', name="Conv_4", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_2"))
    model.add(Conv2D(15, 7, padding='same', name="Conv_5", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_3"))
    model.add(Flatten())
    model.add(Dense(3, activation="softmax"))
    model.compile(optimizer=Adam(learning_rate=0.0001),
                  loss=losses.CategoricalCrossentropy(),
                  metrics=["accuracy"])
    model.summary()
    return model

model = model_structure()

Model: "sequential_6"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 Conv_1 (Conv2D)             (None, 256, 256, 10)      100
 Conv_2 (Conv2D)             (None, 256, 256, 10)      910
 maxpool_1 (MaxPooling2D)    (None, 128, 128, 10)      0
 Conv_3 (Conv2D)             (None, 128, 128, 10)      2510
 Conv_4 (Conv2D)             (None, 128, 128, 10)      2510
 maxpool_2 (MaxPooling2D)    (None, 64, 64, 10)        0
 Conv_5 (Conv2D)             (None, 64, 64, 15)        7365
 maxpool_3 (MaxPooling2D)    (None, 32, 32, 15)        0
 flatten_5 (Flatten)         (None, 15360)             0
 dense_5 (Dense)             (None, 3)                 46083
=================================================================
Total params: 59,478
Trainable params: 59,478
Non-trainable params: 0
_________________________________________________________________
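The parameter counts in this summary can be checked by hand. A convolution with a square kernel of size k, c_in input channels, and f filters has (k * k * c_in + 1) * f weights, the +1 being the bias per filter, and a dense layer has (inputs + 1) * units. The sketch below applies these formulas to the first model; the helper names `conv2d_params` and `dense_params` are made up for illustration.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return (kernel_size * kernel_size * in_channels + 1) * filters


def dense_params(inputs, units):
    """Weights plus one bias per unit for a Dense layer."""
    return (inputs + 1) * units


# (kernel size, input channels, filters) for Conv_1 .. Conv_5
conv_layers = [(3, 1, 10), (3, 10, 10), (5, 10, 10), (5, 10, 10), (7, 10, 15)]
conv_counts = [conv2d_params(k, c_in, f) for k, c_in, f in conv_layers]

# Three 2x2 max-pools halve 256 three times: 256 -> 128 -> 64 -> 32
flattened = 32 * 32 * 15
dense_count = dense_params(flattened, 3)

total = sum(conv_counts) + dense_count

print(conv_counts)  # [100, 910, 2510, 2510, 7365]
print(flattened)    # 15360
print(dense_count)  # 46083
print(total)        # 59478
```

Most of the parameters (46,083 of 59,478) sit in the final dense layer, because the flattened feature map is still 15,360 values wide.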

Training

history = model.fit(training_iterator,

epochs = 100,

batch_size = BATCH_SIZE,

verbose = 1,

validation_data= validation_iterator)

Epoch 1/100

8/8 [==============================] - 3s 306ms/step - loss: 1.0960 - accuracy: 0.3466 - val_loss: 1.0890 - val_accuracy: 0.3939

Epoch 2/100

8/8 [==============================] - 2s 265ms/step - loss: 1.0762 - accuracy: 0.4422 - val_loss: 1.0753 - val_accuracy: 0.3939

Epoch 3/100

8/8 [==============================] - 2s 264ms/step - loss: 1.0552 - accuracy: 0.4422 - val_loss: 1.0591 - val_accuracy: 0.3939

Epoch 4/100

8/8 [==============================] - 2s 265ms/step - loss: 1.0247 - accuracy: 0.4422 - val_loss: 1.0165 - val_accuracy: 0.3939

Epoch 5/100

8/8 [==============================] - 2s 263ms/step - loss: 0.9731 - accuracy: 0.4661 - val_loss: 0.9445 - val_accuracy: 0.6212

Epoch 6/100

8/8 [==============================] - 2s 262ms/step - loss: 0.8888 - accuracy: 0.6255 - val_loss: 0.8396 - val_accuracy: 0.7273

Epoch 7/100

8/8 [==============================] - 2s 262ms/step - loss: 0.7710 - accuracy: 0.7331 - val_loss: 0.7271 - val_accuracy: 0.7121

Epoch 8/100

8/8 [==============================] - 2s 259ms/step - loss: 0.6256 - accuracy: 0.8127 - val_loss: 0.6646 - val_accuracy: 0.6970

Epoch 9/100

8/8 [==============================] - 2s 266ms/step - loss: 0.5209 - accuracy: 0.8167 - val_loss: 0.5964 - val_accuracy: 0.7121

Epoch 10/100

8/8 [==============================] - 2s 263ms/step - loss: 0.4598 - accuracy: 0.8446 - val_loss: 0.6125 - val_accuracy: 0.6818

Epoch 11/100

8/8 [==============================] - 2s 276ms/step - loss: 0.4147 - accuracy: 0.8566 - val_loss: 0.5854 - val_accuracy: 0.7424

Epoch 12/100

8/8 [==============================] - 2s 271ms/step - loss: 0.3614 - accuracy: 0.8606 - val_loss: 0.6405 - val_accuracy: 0.7121

Epoch 13/100

8/8 [==============================] - 2s 266ms/step - loss: 0.3243 - accuracy: 0.8805 - val_loss: 0.5697 - val_accuracy: 0.7424

Epoch 14/100

8/8 [==============================] - 2s 264ms/step - loss: 0.3975 - accuracy: 0.8446 - val_loss: 0.5427 - val_accuracy: 0.7576

Epoch 15/100

8/8 [==============================] - 2s 269ms/step - loss: 0.3453 - accuracy: 0.8606 - val_loss: 0.6043 - val_accuracy: 0.7424

Epoch 16/100

8/8 [==============================] - 2s 264ms/step - loss: 0.3489 - accuracy: 0.8765 - val_loss: 0.5033 - val_accuracy: 0.7727

Epoch 17/100

8/8 [==============================] - 2s 265ms/step - loss: 0.3358 - accuracy: 0.8606 - val_loss: 0.7202 - val_accuracy: 0.7273

Epoch 18/100

8/8 [==============================] - 2s 273ms/step - loss: 0.3518 - accuracy: 0.8765 - val_loss: 0.5462 - val_accuracy: 0.7576

Epoch 19/100

8/8 [==============================] - 2s 266ms/step - loss: 0.3099 - accuracy: 0.8845 - val_loss: 0.4603 - val_accuracy: 0.8030

Epoch 20/100

8/8 [==============================] - 2s 265ms/step - loss: 0.2534 - accuracy: 0.9203 - val_loss: 0.4926 - val_accuracy: 0.7879

Epoch 21/100

8/8 [==============================] - 2s 264ms/step - loss: 0.2760 - accuracy: 0.9084 - val_loss: 0.4660 - val_accuracy: 0.7879

Epoch 22/100

8/8 [==============================] - 2s 267ms/step - loss: 0.2380 - accuracy: 0.9004 - val_loss: 0.4541 - val_accuracy: 0.8030

Epoch 23/100

8/8 [==============================] - 2s 267ms/step - loss: 0.2664 - accuracy: 0.8964 - val_loss: 0.4381 - val_accuracy: 0.8030

Epoch 24/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2927 - accuracy: 0.8924 - val_loss: 0.4307 - val_accuracy: 0.8182

Epoch 25/100

8/8 [==============================] - 2s 271ms/step - loss: 0.3163 - accuracy: 0.8645 - val_loss: 0.4715 - val_accuracy: 0.7879

Epoch 26/100

8/8 [==============================] - 2s 263ms/step - loss: 0.2634 - accuracy: 0.8964 - val_loss: 0.4497 - val_accuracy: 0.8030

Epoch 27/100

8/8 [==============================] - 2s 267ms/step - loss: 0.3057 - accuracy: 0.8805 - val_loss: 0.4060 - val_accuracy: 0.8030

Epoch 28/100

8/8 [==============================] - 2s 263ms/step - loss: 0.2516 - accuracy: 0.9124 - val_loss: 0.4946 - val_accuracy: 0.7879

Epoch 29/100

8/8 [==============================] - 2s 264ms/step - loss: 0.2905 - accuracy: 0.8725 - val_loss: 0.3828 - val_accuracy: 0.8333

Epoch 30/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2703 - accuracy: 0.8884 - val_loss: 0.4362 - val_accuracy: 0.8030

Epoch 31/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2255 - accuracy: 0.9283 - val_loss: 0.3962 - val_accuracy: 0.8485

Epoch 32/100

8/8 [==============================] - 2s 268ms/step - loss: 0.2374 - accuracy: 0.9084 - val_loss: 0.3697 - val_accuracy: 0.8333

Epoch 33/100

8/8 [==============================] - 2s 270ms/step - loss: 0.2240 - accuracy: 0.9084 - val_loss: 0.4175 - val_accuracy: 0.8182

Epoch 34/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2663 - accuracy: 0.9084 - val_loss: 0.3784 - val_accuracy: 0.8636

Epoch 35/100

8/8 [==============================] - 2s 269ms/step - loss: 0.2286 - accuracy: 0.9124 - val_loss: 0.4117 - val_accuracy: 0.8485

Epoch 36/100

8/8 [==============================] - 2s 260ms/step - loss: 0.2512 - accuracy: 0.9004 - val_loss: 0.4037 - val_accuracy: 0.8485

Epoch 37/100

8/8 [==============================] - 2s 263ms/step - loss: 0.2376 - accuracy: 0.8964 - val_loss: 0.3816 - val_accuracy: 0.8182

Epoch 38/100

8/8 [==============================] - 2s 259ms/step - loss: 0.2597 - accuracy: 0.9004 - val_loss: 0.3911 - val_accuracy: 0.8485

Epoch 39/100

8/8 [==============================] - 2s 264ms/step - loss: 0.2134 - accuracy: 0.9163 - val_loss: 0.3685 - val_accuracy: 0.8485

Epoch 40/100

8/8 [==============================] - 2s 265ms/step - loss: 0.2036 - accuracy: 0.9323 - val_loss: 0.3927 - val_accuracy: 0.8485

Epoch 41/100

8/8 [==============================] - 2s 263ms/step - loss: 0.2338 - accuracy: 0.9044 - val_loss: 0.3909 - val_accuracy: 0.8333

Epoch 42/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1750 - accuracy: 0.9243 - val_loss: 0.3673 - val_accuracy: 0.8485

Epoch 43/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1910 - accuracy: 0.9323 - val_loss: 0.3519 - val_accuracy: 0.8485

Epoch 44/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1760 - accuracy: 0.9203 - val_loss: 0.3569 - val_accuracy: 0.8636

Epoch 45/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1858 - accuracy: 0.9283 - val_loss: 0.3348 - val_accuracy: 0.8939

Epoch 46/100

8/8 [==============================] - 2s 268ms/step - loss: 0.2281 - accuracy: 0.9283 - val_loss: 0.3721 - val_accuracy: 0.8788

Epoch 47/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1942 - accuracy: 0.9084 - val_loss: 0.3237 - val_accuracy: 0.8939

Epoch 48/100

8/8 [==============================] - 2s 261ms/step - loss: 0.2121 - accuracy: 0.9243 - val_loss: 0.3331 - val_accuracy: 0.8788

Epoch 49/100

8/8 [==============================] - 2s 259ms/step - loss: 0.1960 - accuracy: 0.9163 - val_loss: 0.3011 - val_accuracy: 0.8788

Epoch 50/100

8/8 [==============================] - 2s 257ms/step - loss: 0.2081 - accuracy: 0.9084 - val_loss: 0.2999 - val_accuracy: 0.9091

Epoch 51/100

8/8 [==============================] - 2s 269ms/step - loss: 0.2003 - accuracy: 0.9323 - val_loss: 0.3071 - val_accuracy: 0.8939

Epoch 52/100

8/8 [==============================] - 2s 258ms/step - loss: 0.2049 - accuracy: 0.9163 - val_loss: 0.3124 - val_accuracy: 0.8636

Epoch 53/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1966 - accuracy: 0.9283 - val_loss: 0.3114 - val_accuracy: 0.8788

Epoch 54/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1685 - accuracy: 0.9402 - val_loss: 0.2999 - val_accuracy: 0.8788

Epoch 55/100

8/8 [==============================] - 2s 258ms/step - loss: 0.1900 - accuracy: 0.9442 - val_loss: 0.3265 - val_accuracy: 0.8788

Epoch 56/100

8/8 [==============================] - 2s 267ms/step - loss: 0.1994 - accuracy: 0.9163 - val_loss: 0.2780 - val_accuracy: 0.8788

Epoch 57/100

8/8 [==============================] - 2s 259ms/step - loss: 0.1913 - accuracy: 0.9323 - val_loss: 0.2797 - val_accuracy: 0.8939

Epoch 58/100

8/8 [==============================] - 2s 264ms/step - loss: 0.2055 - accuracy: 0.9124 - val_loss: 0.3114 - val_accuracy: 0.8939

Epoch 59/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1938 - accuracy: 0.9442 - val_loss: 0.2733 - val_accuracy: 0.8939

Epoch 60/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1902 - accuracy: 0.9283 - val_loss: 0.2676 - val_accuracy: 0.8939

Epoch 61/100

8/8 [==============================] - 2s 269ms/step - loss: 0.1739 - accuracy: 0.9243 - val_loss: 0.2900 - val_accuracy: 0.9091

Epoch 62/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1613 - accuracy: 0.9402 - val_loss: 0.2720 - val_accuracy: 0.9091

Epoch 63/100

8/8 [==============================] - 2s 263ms/step - loss: 0.2034 - accuracy: 0.9163 - val_loss: 0.3617 - val_accuracy: 0.8636

Epoch 64/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1798 - accuracy: 0.9363 - val_loss: 0.3147 - val_accuracy: 0.8333

Epoch 65/100

8/8 [==============================] - 2s 263ms/step - loss: 0.2301 - accuracy: 0.9163 - val_loss: 0.2789 - val_accuracy: 0.8788

Epoch 66/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2055 - accuracy: 0.9124 - val_loss: 0.2814 - val_accuracy: 0.8788

Epoch 67/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1701 - accuracy: 0.9283 - val_loss: 0.2794 - val_accuracy: 0.8788

Epoch 68/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1793 - accuracy: 0.9363 - val_loss: 0.3022 - val_accuracy: 0.8788

Epoch 69/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1818 - accuracy: 0.9283 - val_loss: 0.2742 - val_accuracy: 0.8636

Epoch 70/100

8/8 [==============================] - 2s 269ms/step - loss: 0.1815 - accuracy: 0.9243 - val_loss: 0.2666 - val_accuracy: 0.8939

Epoch 71/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1681 - accuracy: 0.9402 - val_loss: 0.3037 - val_accuracy: 0.8485

Epoch 72/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1708 - accuracy: 0.9203 - val_loss: 0.2617 - val_accuracy: 0.8939

Epoch 73/100

8/8 [==============================] - 2s 262ms/step - loss: 0.2125 - accuracy: 0.9203 - val_loss: 0.3488 - val_accuracy: 0.8485

Epoch 74/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1378 - accuracy: 0.9522 - val_loss: 0.2408 - val_accuracy: 0.9091

Epoch 75/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1985 - accuracy: 0.9442 - val_loss: 0.2302 - val_accuracy: 0.8939

Epoch 76/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1670 - accuracy: 0.9442 - val_loss: 0.2272 - val_accuracy: 0.8788

Epoch 77/100

8/8 [==============================] - 2s 259ms/step - loss: 0.1449 - accuracy: 0.9323 - val_loss: 0.2579 - val_accuracy: 0.8939

Epoch 78/100

8/8 [==============================] - 2s 260ms/step - loss: 0.2069 - accuracy: 0.9243 - val_loss: 0.3032 - val_accuracy: 0.8788

Epoch 79/100

8/8 [==============================] - 2s 267ms/step - loss: 0.2019 - accuracy: 0.8964 - val_loss: 0.2782 - val_accuracy: 0.9091

Epoch 80/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1571 - accuracy: 0.9482 - val_loss: 0.2639 - val_accuracy: 0.8788

Epoch 81/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1528 - accuracy: 0.9522 - val_loss: 0.2639 - val_accuracy: 0.8788

Epoch 82/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1985 - accuracy: 0.9203 - val_loss: 0.2738 - val_accuracy: 0.8788

Epoch 83/100

8/8 [==============================] - 2s 265ms/step - loss: 0.1671 - accuracy: 0.9363 - val_loss: 0.2736 - val_accuracy: 0.8636

Epoch 84/100

8/8 [==============================] - 2s 265ms/step - loss: 0.1401 - accuracy: 0.9482 - val_loss: 0.2672 - val_accuracy: 0.8939

Epoch 85/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1474 - accuracy: 0.9562 - val_loss: 0.2559 - val_accuracy: 0.8788

Epoch 86/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1552 - accuracy: 0.9482 - val_loss: 0.2325 - val_accuracy: 0.8788

Epoch 87/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1568 - accuracy: 0.9482 - val_loss: 0.2525 - val_accuracy: 0.8788

Epoch 88/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1660 - accuracy: 0.9482 - val_loss: 0.2424 - val_accuracy: 0.8636

Epoch 89/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1152 - accuracy: 0.9522 - val_loss: 0.2510 - val_accuracy: 0.8788

Epoch 90/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1437 - accuracy: 0.9482 - val_loss: 0.2698 - val_accuracy: 0.8788

Epoch 91/100

8/8 [==============================] - 2s 265ms/step - loss: 0.1401 - accuracy: 0.9442 - val_loss: 0.2759 - val_accuracy: 0.8636

Epoch 92/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1155 - accuracy: 0.9681 - val_loss: 0.2367 - val_accuracy: 0.8788

Epoch 93/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1585 - accuracy: 0.9283 - val_loss: 0.3214 - val_accuracy: 0.8485

Epoch 94/100

8/8 [==============================] - 2s 267ms/step - loss: 0.2070 - accuracy: 0.9243 - val_loss: 0.3235 - val_accuracy: 0.8788

Epoch 95/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1957 - accuracy: 0.9124 - val_loss: 0.4249 - val_accuracy: 0.8485

Epoch 96/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1821 - accuracy: 0.9203 - val_loss: 0.3548 - val_accuracy: 0.8333

Epoch 97/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1278 - accuracy: 0.9681 - val_loss: 0.3206 - val_accuracy: 0.8788

Epoch 98/100

8/8 [==============================] - 2s 265ms/step - loss: 0.1440 - accuracy: 0.9442 - val_loss: 0.3125 - val_accuracy: 0.8636

Epoch 99/100

8/8 [==============================] - 2s 267ms/step - loss: 0.1409 - accuracy: 0.9363 - val_loss: 0.3066 - val_accuracy: 0.8636

Epoch 100/100

8/8 [==============================] - 2s 256ms/step - loss: 0.1262 - accuracy: 0.9442 - val_loss: 0.2725 - val_accuracy: 0.8636

history.history.keys()

dict_keys(['loss', 'accuracy', 'val_loss', 'val_accuracy'])
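`EarlyStopping` is imported at the top of the notebook but never passed to `fit`, so training always runs the full 100 epochs, while the log shows the validation loss reaching its minimum of 0.2272 at epoch 76. The sketch below is a simplified, pure-Python version of a patience rule applied to the first 20 `val_loss` values from the log. It is not the Keras implementation, and the function name `simulate_early_stopping` is made up for illustration.

```python
# val_loss for epochs 1-20, copied from the training log above
val_losses = [
    1.0890, 1.0753, 1.0591, 1.0165, 0.9445,
    0.8396, 0.7271, 0.6646, 0.5964, 0.6125,
    0.5854, 0.6405, 0.5697, 0.5427, 0.6043,
    0.5033, 0.7202, 0.5462, 0.4603, 0.4926,
]


def simulate_early_stopping(losses, patience):
    """Return (stop_epoch, best_epoch), both 1-based.

    Training stops once `patience` consecutive epochs fail to improve on the
    best loss seen so far. stop_epoch is None if that never happens.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch


print(simulate_early_stopping(val_losses, patience=2))  # (18, 16)
print(simulate_early_stopping(val_losses, patience=5))  # (None, 19)
```

With a patience of 2, training would halt at epoch 18, long before the loss settles, so a noisy validation curve like this one needs a larger patience. In Keras the corresponding setup would be passing `callbacks=[EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)]` to `model.fit`.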

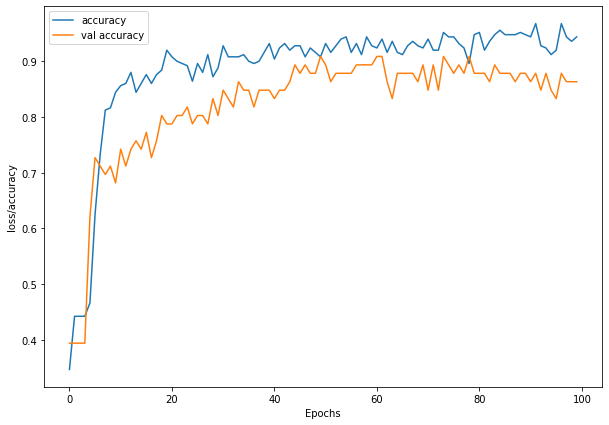

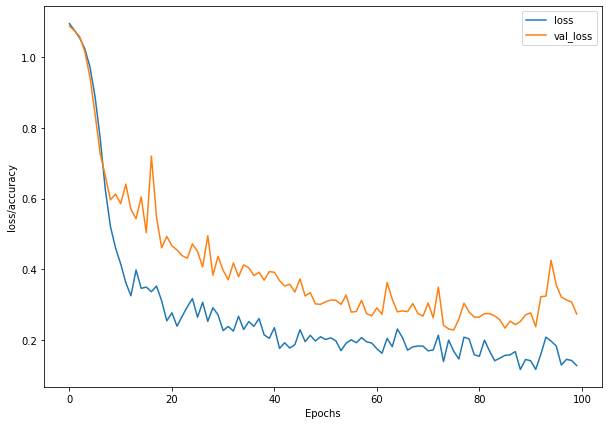

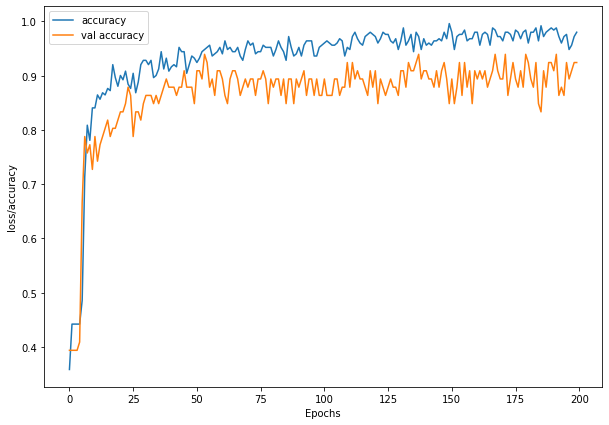

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 7))

plt.plot(history.history['accuracy'], label = "accuracy")

plt.plot(history.history['val_accuracy'], label= "val accuracy")

plt.xlabel("Epochs")

plt.ylabel("accuracy")

plt.legend()

plt.show()

plt.figure(figsize=(10, 7))

plt.plot(history.history['loss'], label = "loss")

plt.plot(history.history['val_loss'], label= "val_loss")

plt.xlabel("Epochs")

plt.ylabel("loss")

plt.legend()

plt.show()

model.evaluate(validation_iterator)

3/3 [==============================] - 0s 70ms/step - loss: 0.2725 - accuracy: 0.8636

[0.2724951207637787, 0.8636363744735718]

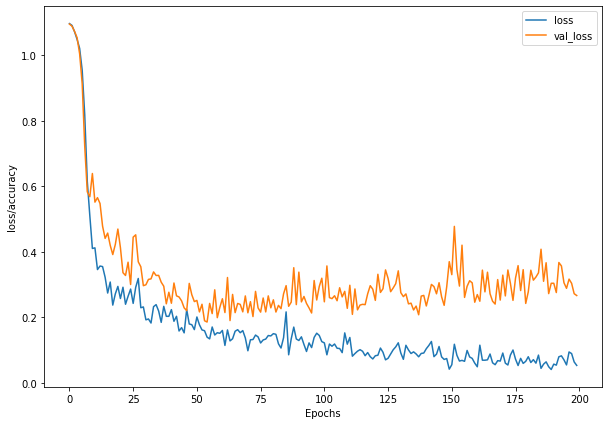

Fine-tuning the model

def train_plot_model(model, Epochs):
    # Train silently, then plot accuracy and loss curves for both splits
    history = model.fit(training_iterator,
                        epochs=Epochs,
                        batch_size=BATCH_SIZE,
                        verbose=0,
                        validation_data=validation_iterator)
    plt.figure(figsize=(10, 7))
    plt.plot(history.history['accuracy'], label="accuracy")
    plt.plot(history.history['val_accuracy'], label="val accuracy")
    plt.xlabel("Epochs")
    plt.ylabel("accuracy")
    plt.legend()
    plt.show()
    plt.figure(figsize=(10, 7))
    plt.plot(history.history['loss'], label="loss")
    plt.plot(history.history['val_loss'], label="val_loss")
    plt.xlabel("Epochs")
    plt.ylabel("loss")
    plt.legend()
    plt.show()
    return history, model

def model_structure_2():
    model = Sequential()
    model.add(Conv2D(10, 3, padding='same', input_shape=(256, 256, 1), name="Conv_1", activation="relu"))
    model.add(Conv2D(10, 3, padding='same', name="Conv_2", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_1"))
    model.add(Conv2D(10, 5, padding='same', name="Conv_3", activation="relu"))
    model.add(Conv2D(10, 5, padding='same', name="Conv_4", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_2"))
    model.add(Conv2D(20, 7, padding='same', name="Conv_5", activation="relu"))
    model.add(Conv2D(20, 7, padding='same', name="Conv_6", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_3"))
    model.add(Conv2D(25, 7, padding='same', name="Conv_7", activation="relu"))
    model.add(MaxPool2D(2, name="maxpool_4"))
    model.add(Flatten())
    model.add(Dense(3, activation="softmax"))
    model.compile(optimizer=Adam(learning_rate=0.0001),
                  loss=losses.CategoricalCrossentropy(),
                  metrics=["accuracy"])
    model.summary()
    return model

model_2 = model_structure_2()

Model: "sequential_10"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 Conv_1 (Conv2D)             (None, 256, 256, 10)      100
 Conv_2 (Conv2D)             (None, 256, 256, 10)      910
 maxpool_1 (MaxPooling2D)    (None, 128, 128, 10)      0
 Conv_3 (Conv2D)             (None, 128, 128, 10)      2510
 Conv_4 (Conv2D)             (None, 128, 128, 10)      2510
 maxpool_2 (MaxPooling2D)    (None, 64, 64, 10)        0
 Conv_5 (Conv2D)             (None, 64, 64, 20)        9820
 Conv_6 (Conv2D)             (None, 64, 64, 20)        19620
 maxpool_3 (MaxPooling2D)    (None, 32, 32, 20)        0
 Conv_7 (Conv2D)             (None, 32, 32, 25)        24525
 maxpool_4 (MaxPooling2D)    (None, 16, 16, 25)        0
 flatten_9 (Flatten)         (None, 6400)              0
 dense_9 (Dense)             (None, 3)                 19203
=================================================================
Total params: 79,198
Trainable params: 79,198
Non-trainable params: 0
_________________________________________________________________

history_2, model_2 = train_plot_model(model_2, 200)

model_2.evaluate(validation_iterator)

3/3 [==============================] - 0s 54ms/step - loss: 0.2663 - accuracy: 0.9242

[0.2662919759750366, 0.9242424368858337]
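With only 66 validation images, it helps to translate these accuracies back into image counts. The sketch below does that for the two `model.evaluate` results reported in this post; the helper name `correct_count` is made up for illustration.

```python
VAL_IMAGES = 66


def correct_count(accuracy, num_images):
    """Convert an accuracy fraction back into a number of correctly classified images."""
    return round(accuracy * num_images)


model_1_correct = correct_count(0.8636363744735718, VAL_IMAGES)
model_2_correct = correct_count(0.9242424368858337, VAL_IMAGES)

# Accuracy change caused by a single image, in percentage points
points_per_image = 100 / VAL_IMAGES

print(model_1_correct, model_2_correct)  # 57 61
print(round(points_per_image, 2))        # 1.52
```

The gap between the two models is four images, so the comparison should be read with some caution given how small the validation set is.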

Evaluation

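def evaluation(model):
    # flow_from_directory shuffles by default, so labels and predictions are
    # collected batch by batch to keep each prediction paired with its label
    true_classes = []
    predicted_classes = []
    for batch_index in range(len(validation_iterator)):
        images, labels = validation_iterator[batch_index]
        predictions = model.predict(images, verbose=0)
        true_classes.extend(numpy.argmax(labels, axis=1))
        predicted_classes.extend(numpy.argmax(predictions, axis=1))
    class_labels = list(validation_iterator.class_indices.keys())
    report = classification_report(true_classes, predicted_classes, target_names=class_labels)
    print(report)
    cm = confusion_matrix(true_classes, predicted_classes)
    print(cm)

The two reports below were computed by comparing `model.predict` output on the shuffled validation iterator with `validation_iterator.classes`, which is stored in directory order. Because `flow_from_directory` shuffles by default, predictions and labels were misaligned, which explains why these accuracies (0.29 and 0.42) sit near chance while `model.evaluate` reported 0.86 and 0.92 on the same images. Pairing labels and predictions batch by batch, as `evaluation` does above, avoids the mismatch.

evaluation(model)

              precision    recall  f1-score   support

       Covid       0.36      0.35      0.35        26
      Normal       0.28      0.25      0.26        20
   Pneumonia       0.22      0.25      0.23        20

    accuracy                           0.29        66
   macro avg       0.29      0.28      0.28        66
weighted avg       0.29      0.29      0.29        66

[[ 9  6 11]
 [ 8  5  7]
 [ 8  7  5]]

evaluation(model_2)

              precision    recall  f1-score   support

       Covid       0.50      0.50      0.50        26
      Normal       0.42      0.40      0.41        20
   Pneumonia       0.33      0.35      0.34        20

    accuracy                           0.42        66
   macro avg       0.42      0.42      0.42        66
weighted avg       0.43      0.42      0.42        66

[[13  6  7]
 [ 5  8  7]
 [ 8  5  7]]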
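The precision, recall, and accuracy columns of a classification report follow directly from the confusion matrix, where rows are true classes and columns are predicted classes. The sketch below recomputes them for the second matrix exactly as printed, using plain Python; the helper name `per_class_metrics` is made up for illustration.

```python
labels = ["Covid", "Normal", "Pneumonia"]

# Confusion matrix printed for model_2: rows = true class, columns = predicted class
cm = [
    [13, 6, 7],
    [5, 8, 7],
    [8, 5, 7],
]


def per_class_metrics(matrix):
    """Return a list of (precision, recall) tuples, one per class."""
    size = len(matrix)
    metrics = []
    for k in range(size):
        true_positive = matrix[k][k]
        predicted_as_k = sum(matrix[row][k] for row in range(size))  # column sum
        actually_k = sum(matrix[k])                                  # row sum
        precision = true_positive / predicted_as_k if predicted_as_k else 0.0
        recall = true_positive / actually_k if actually_k else 0.0
        metrics.append((precision, recall))
    return metrics


metrics = per_class_metrics(cm)
accuracy = sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)

for name, (precision, recall) in zip(labels, metrics):
    print(f"{name:>10}  precision={precision:.2f}  recall={recall:.2f}")
print(f"accuracy={accuracy:.2f}")
```

The printed values match the report for `model_2`: 0.50/0.50 for Covid, 0.42/0.40 for Normal, 0.33/0.35 for Pneumonia, and an overall accuracy of 0.42.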